
For many of our clients, running Slurm or Kubernetes clusters is a key use case for their HPC infrastructure. Both are core components of different types of modern HPC workloads, but because they do not interoperate, running both on the same infrastructure usually means creating and maintaining two separate clusters. SchedMD's Slinky project aims to address this by running Slurm inside Kubernetes. The result is a unified solution in which Slurm can take advantage of Kubernetes' auto-scaling capabilities and resources can be allocated efficiently between the two.

StackHPC has been interested for some time in packaging Slinky as an app exposed through our Azimuth self-service portal, but as an app it poses some unique challenges. Slinky's component Helm charts rely on CRDs (custom resource definitions) provided by their dependencies, and those CRDs must already be present when the charts are installed. Helm's native dependency management cannot handle this gracefully: it installs dependencies concurrently with the main chart, yet assumes that every CRD the chart needs is either defined within the chart itself or installed beforehand. As a result, Slinky cannot be installed as a single Helm chart, which is what Azimuth's existing tooling for deploying Helm-based Kubernetes applications requires.
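The ordering problem can be made concrete with a toy model. This is not Helm's implementation: the hypothetical `ToyCluster` class only mimics the relevant behaviour, in which every manifest in a release must map to a kind the API server already knows before anything is created. A resource that needs a CRD shipped as an ordinary template in the same release is therefore rejected. cert-manager's `Certificate` kind (`cert-manager.io/v1`) serves as the example CRD-backed resource; the manifests are trimmed to the fields the model needs.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical stand-in for an API server that only accepts kinds it knows about."""
    # Built-in kinds are always available; CRD-backed kinds must be registered first.
    known_kinds: set = field(default_factory=lambda: {
        ("v1", "Namespace"),
        ("apps/v1", "Deployment"),
        ("apiextensions.k8s.io/v1", "CustomResourceDefinition"),
    })
    objects: list = field(default_factory=list)

    def install_release(self, manifests):
        """Mimic one release: map every manifest to a known kind first, then create them all."""
        unknown = [(m["apiVersion"], m["kind"]) for m in manifests
                   if (m["apiVersion"], m["kind"]) not in self.known_kinds]
        if unknown:
            # Nothing is created if any kind cannot be mapped.
            raise RuntimeError(f"no matches for kind(s): {unknown}")
        for m in manifests:
            self.objects.append(m)
            if m["kind"] == "CustomResourceDefinition":
                # A registered CRD only makes its kind available to *later* requests.
                spec = m["spec"]
                for version in spec["versions"]:
                    self.known_kinds.add((f"{spec['group']}/{version['name']}",
                                          spec["names"]["kind"]))


CRD = {"apiVersion": "apiextensions.k8s.io/v1", "kind": "CustomResourceDefinition",
       "spec": {"group": "cert-manager.io", "names": {"kind": "Certificate"},
                "versions": [{"name": "v1"}]}}
CERT = {"apiVersion": "cert-manager.io/v1", "kind": "Certificate"}

cluster = ToyCluster()
try:
    cluster.install_release([CRD, CERT])  # CRD and its consumer in a single release
except RuntimeError as err:
    print("single release failed:", err)

cluster.install_release([CRD])   # the dependency first...
cluster.install_release([CERT])  # ...then the resource that needs it
print(len(cluster.objects))  # 2
```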

FluxCD and Helm Dependency Management

FluxCD is a tool for continuous deployment of Kubernetes resources. It wraps Helm charts in HelmRelease custom resources: management objects that Flux's controllers use to deploy charts from upstream repositories, and to monitor and reconcile the state of those deployments. A HelmRelease can declare other HelmReleases as its dependencies, and Flux only installs a HelmRelease's chart once all of its dependencies report as ready and healthy. This allows dependencies between Helm charts to be managed dynamically.
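The gating behaviour can be illustrated with a small, simplified simulation. It is not Flux's controller logic: each HelmRelease is modelled as a dictionary carrying a `spec.dependsOn` list (the field Flux's HelmRelease API uses for dependencies), and on each reconciliation pass a release is only installed once all of its dependencies are ready. The function names, and the assumption that an installed release becomes healthy before the next pass, are illustrative.

```python
def ready_to_install(release, ready):
    """A release may proceed only when every entry in spec.dependsOn is ready."""
    return all(dep["name"] in ready for dep in release["spec"].get("dependsOn", []))


def reconcile(releases, max_passes=10):
    """Repeatedly reconcile; return the 'waves' in which releases were installed."""
    ready, waves = set(), []
    for _ in range(max_passes):
        wave = [r["metadata"]["name"] for r in releases
                if r["metadata"]["name"] not in ready and ready_to_install(r, ready)]
        if not wave:
            break  # nothing left that can progress (done, or blocked by a cycle)
        # Simplifying assumption: installed releases are healthy before the next pass.
        ready.update(wave)
        waves.append(wave)
    return waves


def helmrelease(name, depends_on=()):
    """Build a minimal HelmRelease-shaped dict with the given dependencies."""
    return {
        "apiVersion": "helm.toolkit.fluxcd.io/v2",
        "kind": "HelmRelease",
        "metadata": {"name": name},
        "spec": {"dependsOn": [{"name": d} for d in depends_on]},
    }


# Declared out of order on purpose: the dependency graph, not list order, decides.
releases = [
    helmrelease("slurm", depends_on=["slurm-operator"]),
    helmrelease("slurm-operator", depends_on=["cert-manager"]),
    helmrelease("cert-manager"),
]
print(reconcile(releases))
# [['cert-manager'], ['slurm-operator'], ['slurm']]
```

Releases whose dependencies never become ready (including a dependency cycle) simply stay pending, which matches the intent of gating installation on dependency health.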

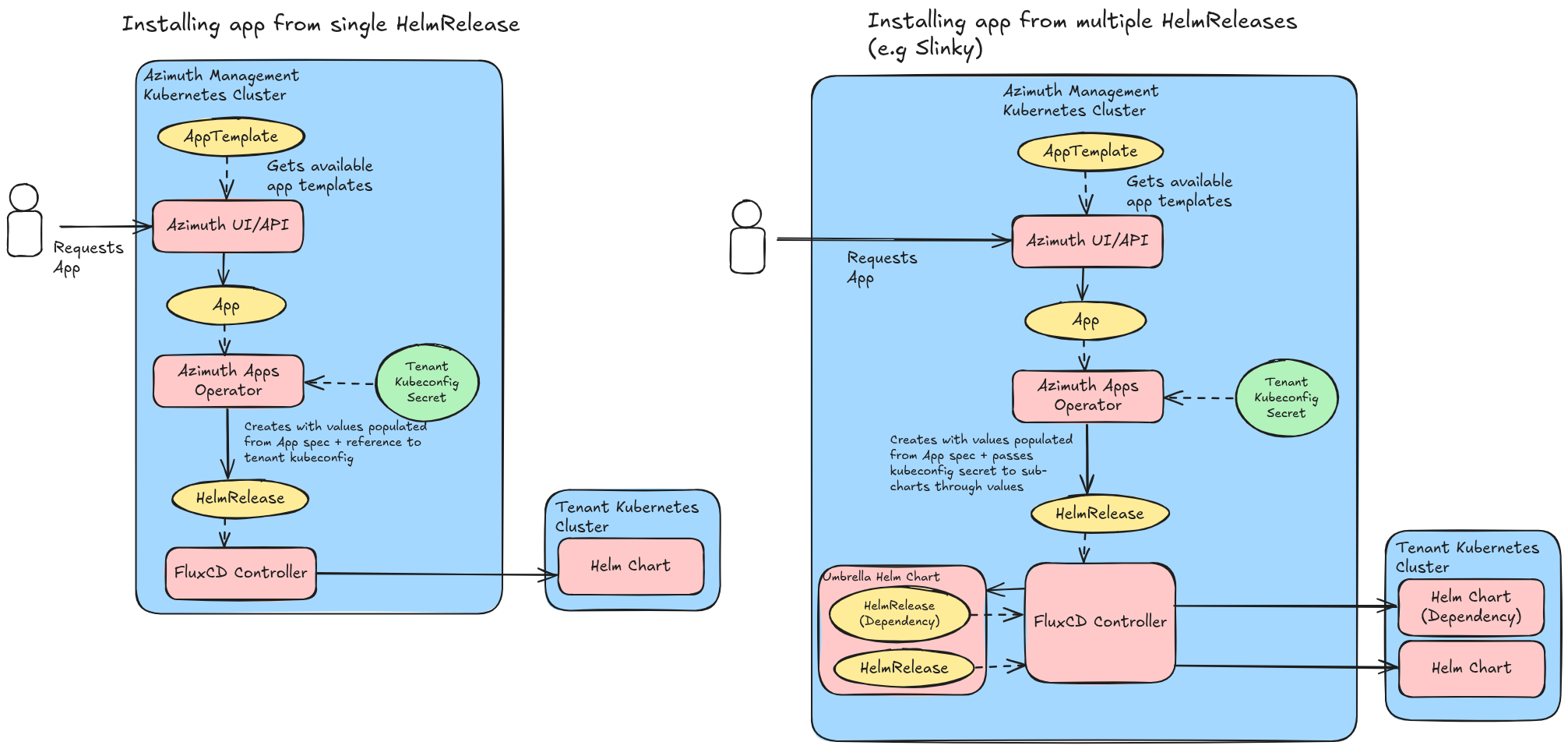

Flux forms the core of the new Azimuth apps operator, which is designed to replace the existing vanilla Helm-based tooling for deploying self-service Kubernetes applications. It is currently used to deploy apps into Kubernetes tenancies in Azimuth's newly released apps-only mode. The operator templates out Flux resources from the application's specification. These resources are created on the Azimuth management cluster, but reference a kubeconfig secret that Flux uses to install the underlying Helm chart onto the user's tenant Kubernetes cluster. Using Flux makes applications more resilient, because they are continuously managed by Flux's reconciliation loop rather than by the one-and-done approach of the Helm tooling in our existing operators.
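As a rough illustration of that templating step, the sketch below turns an application specification into a HelmRelease manifest. `spec.kubeConfig.secretRef` is the HelmRelease field that points Flux at a remote cluster; everything else here, including the keys of the `app` dictionary, the `render_helmrelease` name and the secret naming scheme, is a hypothetical assumption for the example rather than the apps operator's actual template.

```python
import json


def render_helmrelease(app):
    """Render a Flux HelmRelease (as a dict) from a hypothetical app spec.

    The HelmRelease object lives on the management cluster; the kubeConfig
    secret tells Flux to install the chart onto the tenant cluster instead.
    """
    return {
        "apiVersion": "helm.toolkit.fluxcd.io/v2",
        "kind": "HelmRelease",
        "metadata": {"name": app["name"], "namespace": app["namespace"]},
        "spec": {
            "interval": "5m",  # how often Flux re-reconciles the release
            "chart": {
                "spec": {
                    "chart": app["chart"],
                    "version": app["version"],
                    "sourceRef": {"kind": "HelmRepository", "name": app["repository"]},
                }
            },
            # Hypothetical naming convention for the tenant cluster's kubeconfig secret.
            "kubeConfig": {"secretRef": {"name": f"{app['cluster']}-kubeconfig"}},
            "targetNamespace": app.get("targetNamespace", app["name"]),
            "values": app.get("values", {}),
        },
    }


# Example app spec; all names are placeholders.
app = {"name": "my-app", "namespace": "tenant-a", "chart": "example-chart",
       "version": "1.0.0", "repository": "example-repo", "cluster": "tenant-a-k8s"}
print(json.dumps(render_helmrelease(app), indent=2))
```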

Slinky can be packaged this way, with Flux first installing its cert-manager dependency, then deploying Slurm Operator, followed by a Slurm control plane. One challenge this introduced was that an additional HelmRelease resource was required for each dependency, which conflicts with Azimuth's single-chart model. The obvious solution is to package these HelmRelease resources under an umbrella Helm chart and have Flux install that instead. However, the operator is designed to create HelmReleases in its management cluster that install their charts onto tenant clusters, whereas the umbrella chart's contents are themselves HelmRelease resources, which must be installed into the management cluster alongside the Flux controllers. The apps operator therefore had to be modified to support a pattern where a Helm chart installs Flux resources into the local cluster, with a field in its values file for specifying a kubeconfig that is passed through to the constituent HelmRelease objects it installs.
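The pass-through pattern might look roughly like the following, written in Python rather than as Helm templates for brevity. The hypothetical umbrella chart's values carry a single `kubeconfigSecret` entry (an assumed key name) that is copied into every constituent HelmRelease's `spec.kubeConfig.secretRef`, while `spec.dependsOn` chains cert-manager, Slurm Operator and the Slurm control plane in order. Release and repository names are illustrative. The umbrella chart, and therefore these HelmRelease objects, would be installed into the management cluster; only the charts they reference end up on the tenant cluster.

```python
# Install order for the Slinky stack; each entry depends on the one before it.
# (release name, chart name, HelmRepository name): all illustrative assumptions.
COMPONENTS = [
    ("cert-manager", "cert-manager", "jetstack"),
    ("slurm-operator", "slurm-operator", "slinky"),
    ("slurm", "slurm", "slinky"),
]


def render_umbrella(values):
    """Return the HelmRelease objects a hypothetical umbrella chart would template."""
    releases, previous = [], None
    for release_name, chart, repo in COMPONENTS:
        spec = {
            "interval": "5m",
            "chart": {"spec": {"chart": chart,
                               "sourceRef": {"kind": "HelmRepository", "name": repo}}},
            # The same tenant kubeconfig secret is passed through to every child release.
            "kubeConfig": {"secretRef": {"name": values["kubeconfigSecret"]}},
        }
        if previous is not None:
            # Gate this release on the previous component being ready.
            spec["dependsOn"] = [{"name": previous}]
        releases.append({
            "apiVersion": "helm.toolkit.fluxcd.io/v2",
            "kind": "HelmRelease",
            "metadata": {"name": release_name, "namespace": values["namespace"]},
            "spec": spec,
        })
        previous = release_name
    return releases


for r in render_umbrella({"kubeconfigSecret": "tenant-a-kubeconfig", "namespace": "tenant-a"}):
    print(r["metadata"]["name"], "->", r["spec"].get("dependsOn", []))
```

Keeping the kubeconfig as a single value means the umbrella chart stays a normal, self-contained Helm chart, and only the operator needs to know which tenant cluster it targets.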

By offering Slinky as an Azimuth app (compatible with the new standalone mode) we hope to lower the barrier to entry for Slurm, and allow for HPC clusters to be used as efficiently as possible.

Get in touch

If you would like to get in touch, we would love to hear from you. Reach out to us via Bluesky, LinkedIn or directly via our contact page.