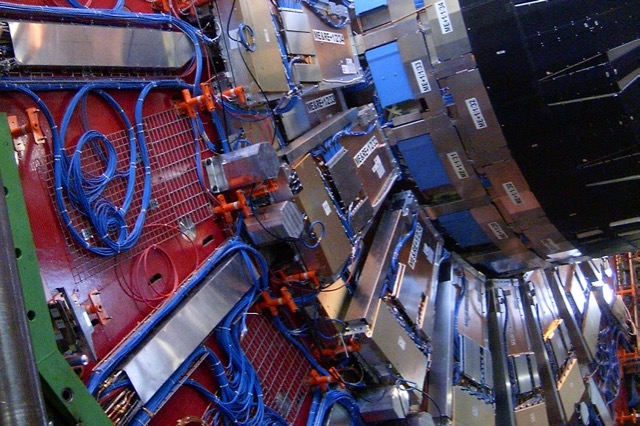

Housed at Cambridge University, the ALaSKA SDP Performance Prototype Platform has been quick to take advantage of the Big Data Cooperation Agreement signed over the summer with CERN. ALaSKA uses OpenStack to deliver a flexible but performant bare metal compute environment, enabling SKA project scientists to experiment with and explore software technologies and make objective performance comparisons.

The ALaSKA system uses several OpenStack technologies that are already in full-scale production at CERN. Conversely, to develop ALaSKA's capability some advanced technologies have been developed by the StackHPC team managing ALaSKA. The CERN team have identified several areas where ALaSKA's experience can inform the ongoing development of CERN's compute infrastructure, particularly as they scale up to meet the challenges of the forthcoming high-luminosity upgrade.

Following the agreement, CERN and SKA project staff have met on several occasions through the autumn to talk over the practical details of sharing effort, and StackHPC's team was delighted to participate in the discussions relating to OpenStack. These discussions led to a co-presentation at OpenStack Sydney by Stig and Belmiro Moreira, a Cloud Architect in the team at CERN.

Stig will also be elaborating on the same topic at the Research Councils UK Cloud Workshop 2018 at the Francis Crick Institute in London on 8th January 2018.

Beyond meeting the goals of major flagship science programmes, this collaboration speaks to a wider unmet need among the growing Scientific OpenStack community for OpenStack infrastructure that enables the next generation of flexible HPC.

A number of key priorities have been identified so far:

- Using Ironic for infrastructure management.

- Better support for bare metal compute resources in Magnum.

- High performance Ceph, especially using RDMA-enabled interconnects.

- Intelligent workload scheduling in federated environments.

- Opportunistic "spot" instances and preemption in OpenStack.

Enter the Scientific SIG

The teams from CERN and the SKA see these issues as far from specific to their own use cases, and they are certainly not the only people around the world working on making OpenStack even better for research computing.

Through the Scientific SIG, the teams are starting to coordinate activities between themselves, and also with other research institutions active in the Scientific OpenStack community.

This open collaboration will be actively discussed during the Scientific SIG's scheduled session at the Project Teams Gathering (PTG), coming up in Dublin, February 26th - March 2nd 2018.