
This case study was contributed to the Open Infrastructure for AI white paper.

AI workloads demand some of the most performance-intensive infrastructure on the market today. Harnessing its full potential is critical to realising a return on substantial investments in hardware and software.

6G AI Sweden has the ambition to provide Swedish companies with world-class AI capabilities, whilst maintaining absolute data sovereignty. To realise this ambition, 6G AI Sweden selected a technology stack centred around OpenStack, Kubernetes and open infrastructure solutions, designed and deployed in partnership with StackHPC.

Hardware Infrastructure

Compute

6G AI Sweden's AI compute nodes are based on the Nvidia "HGX" reference architecture:

- 8x Nvidia H200 GPUs

- 8x 400G NDR Infiniband high-speed networking

- 8x NVMe storage devices, local to each GPU

- 2x200G BlueField-3 Ethernet SmartNIC

- 1G Ethernet for provisioning

- Dedicated BMC connection
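
As a rough illustration of what this specification implies, the nominal per-node network bandwidth can be worked out directly from the link counts above. This is a back-of-envelope sketch using signalling rates only; achievable throughput will be lower once protocol overheads are taken into account.

```python
# Back-of-envelope per-node network bandwidth for the HGX node listed above.
# Figures are nominal link rates, not measured throughput.
IB_LINKS = 8      # 8x 400G NDR Infiniband, one per GPU
IB_GBPS = 400
ETH_LINKS = 2     # 2x200G BlueField-3 Ethernet
ETH_GBPS = 200


def gbit_to_gbyte(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


ib_total = IB_LINKS * IB_GBPS      # aggregate Infiniband bandwidth, Gb/s
eth_total = ETH_LINKS * ETH_GBPS   # aggregate Ethernet bandwidth, Gb/s

if __name__ == "__main__":
    print(f"Infiniband: {ib_total} Gb/s ({gbit_to_gbyte(ib_total):.0f} GB/s)")
    print(f"Ethernet:   {eth_total} Gb/s ({gbit_to_gbyte(eth_total):.0f} GB/s)")
```

In other words, each node has roughly 3.2 Tb/s (400 GB/s) of Infiniband bandwidth for GPU-to-GPU traffic, eight times its Ethernet bandwidth.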

All server hardware is managed using the industry-standard Redfish protocol.
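
As an illustration of what Redfish management looks like, the sketch below builds (but does not send) a standard Redfish `ComputerSystem.Reset` request using only the Python standard library. The BMC address, system ID and credentials are hypothetical placeholders; Redfish system IDs vary between vendors, and in normal operation Ironic issues requests like this on the operator's behalf.

```python
import base64
import json
import urllib.request

# A subset of the ResetType values defined by the Redfish ComputerSystem schema.
VALID_RESET_TYPES = {"On", "ForceOff", "GracefulShutdown",
                     "GracefulRestart", "ForceRestart", "PowerCycle"}


def build_reset_request(bmc: str, system_id: str, reset_type: str,
                        user: str, password: str) -> urllib.request.Request:
    """Build a Redfish ComputerSystem.Reset POST request for one server."""
    if reset_type not in VALID_RESET_TYPES:
        raise ValueError(f"unsupported ResetType: {reset_type}")
    url = (f"https://{bmc}/redfish/v1/Systems/{system_id}"
           "/Actions/ComputerSystem.Reset")
    body = json.dumps({"ResetType": reset_type}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
    )


if __name__ == "__main__":
    # Hypothetical BMC address, system ID and credentials, for illustration only.
    req = build_reset_request("10.0.0.10", "1", "ForceRestart", "admin", "secret")
    print(req.get_method(), req.full_url)
```

Sending the request would be a matter of calling `urllib.request.urlopen(req)`, typically with an SSL context configured for the BMC's certificate.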

A dedicated hypervisor resource provides virtualised compute for control, monitoring and supporting functions.

Networking

6G AI Sweden's high-speed fabric uses Nvidia 400G NDR Infiniband, deployed as a multi-rail network interconnecting the H200 GPUs.

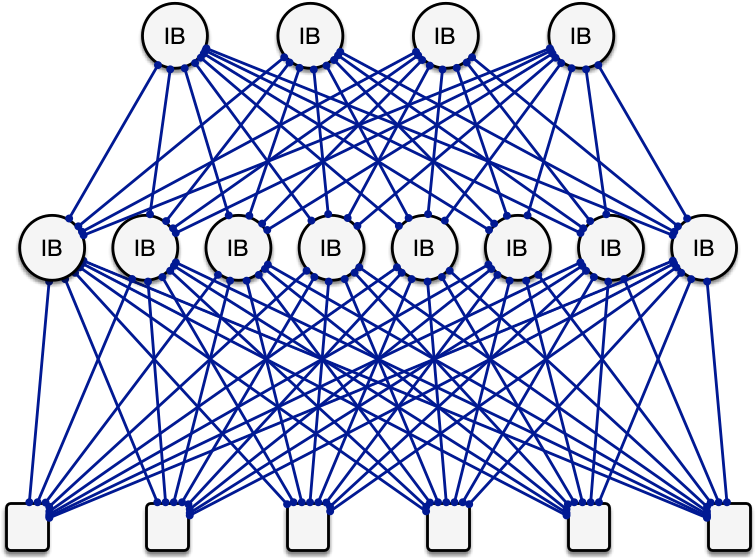

An example Infiniband network fabric, in a fat tree topology, in which each compute node (bottom row) has 8 Infiniband links. Multiple links may exist between switches to produce a non-blocking network, in which all nodes could communicate simultaneously at full network bandwidth.
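
The capacity of a non-blocking fat tree follows from simple port arithmetic. The sketch below sizes a generic two-level (leaf/spine) tree built from identical switches. The 64-port switch radix in the example is an assumption for illustration, and this is not a description of 6G AI Sweden's actual topology.

```python
def two_level_fat_tree(radix: int) -> dict:
    """Size a non-blocking two-level (leaf/spine) fat tree of identical switches.

    Each leaf splits its ports evenly: radix/2 down to endpoints and radix/2
    up to spines, so uplink bandwidth equals downlink bandwidth.
    """
    if radix % 2:
        raise ValueError("switch radix must be even")
    down = radix // 2
    spines = radix // 2   # each leaf has one uplink to every spine
    leaves = radix        # each spine port connects to a distinct leaf
    return {"leaves": leaves, "spines": spines, "endpoint_ports": leaves * down}


def max_nodes(radix: int, links_per_node: int) -> int:
    """Nodes that fit when each node consumes links_per_node endpoint ports."""
    return two_level_fat_tree(radix)["endpoint_ports"] // links_per_node


if __name__ == "__main__":
    # Assumed 64-port switches; 8 Infiniband links per node as in the HGX spec.
    print(two_level_fat_tree(64))
    print(max_nodes(64, 8))
```

For example, with 64-port switches and 8 Infiniband links per node, `max_nodes(64, 8)` gives 256 nodes; larger systems would need a third switch tier or higher-radix switches.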

The infrastructure's Ethernet network, also from Nvidia, is built on 800G switches whose ports are broken out into 2x200G bonded links connecting the compute nodes and storage.

In addition, management Ethernet networks exist for server provisioning, control and power/management.

Storage

6G AI Sweden selected high-performance storage from VAST Data.

VAST Data storage provides the 6G AI cloud with object, block and file storage services using industry-standard protocols. In addition, it offers self-service flexibility, multi-tenancy and industry-leading performance.

OpenStack Cloud Infrastructure

For AI use cases, a significant advantage of OpenStack is its native support for bare metal, virtualisation and containerisation, all within the same reconfigurable infrastructure. OpenStack's multi-tenancy model and fine-grained policy are also well suited to cloud-native service providers of AI infrastructure.

OpenStack Kayobe provisions clouds using infrastructure-as-code principles. Hardware configuration, OS provisioning and network configuration are all defined in a single version-controlled repository, and OpenStack services are deployed and configured via Kolla-Ansible. Kayobe was selected for its precise configuration and management of infrastructure, its flexibility, its ease of operation and its built-in support for high-performance computing.

For the AI compute nodes a bare metal cloud infrastructure was created using OpenStack Ironic. The servers are deployed using Ironic's virtual media driver, streamlining the bootstrap process and avoiding the need for DHCP and iPXE steps during the deployment.
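
For illustration, the sketch below builds the attributes for enrolling a node with Ironic's `redfish` hardware type and `redfish-virtual-media` boot interface. The node name, BMC URL, credentials and Glance image IDs are hypothetical placeholders, and a production enrolment would set further fields (ports, properties and so on).

```python
def redfish_vmedia_node(name: str, bmc_url: str, system_path: str,
                        username: str, password: str,
                        deploy_kernel: str, deploy_ramdisk: str) -> dict:
    """Build attributes for an Ironic node booted via Redfish virtual media."""
    return {
        "name": name,
        "driver": "redfish",
        "boot_interface": "redfish-virtual-media",
        "driver_info": {
            "redfish_address": bmc_url,
            "redfish_system_id": system_path,
            "redfish_username": username,
            "redfish_password": password,
            # Ironic can build a bootable ISO from these deploy images and
            # attach it through the BMC as virtual media, instead of network
            # booting the deploy ramdisk via PXE/iPXE.
            "deploy_kernel": deploy_kernel,
            "deploy_ramdisk": deploy_ramdisk,
        },
    }


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    attrs = redfish_vmedia_node(
        name="gpu-node-01",
        bmc_url="https://10.0.0.10",
        system_path="/redfish/v1/Systems/1",
        username="admin",
        password="secret",
        deploy_kernel="<glance-image-id-of-deploy-kernel>",
        deploy_ramdisk="<glance-image-id-of-deploy-ramdisk>",
    )
    print(attrs["driver"], attrs["boot_interface"])
```

With openstacksdk, these attributes could be passed as `conn.baremetal.create_node(**attrs)`, where `conn` is an authenticated `openstack.connect()` connection.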

Multi-tenant isolation is a critical requirement for 6G AI Sweden's business model, in which bare metal compute infrastructure is provisioned into client tenancies. Ironic provides features for managing bare metal safely in a multi-tenant environment, such as configurable deployment and cleaning steps.
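
Ironic cleaning steps are expressed as a JSON list of `interface`/`step` pairs. As a minimal sketch, the function below produces a manual-cleaning request that wipes disk metadata between tenants, or performs a full disk erase when requested. Which steps 6G AI Sweden actually runs is not described here.

```python
import json


def clean_steps(full_erase: bool = False) -> str:
    """Return a JSON clean-steps list for manual cleaning of a bare metal node.

    erase_devices_metadata removes partition tables and filesystem signatures
    quickly; erase_devices performs a full (slower) erase of the disks.
    """
    step = "erase_devices" if full_erase else "erase_devices_metadata"
    return json.dumps([{"interface": "deploy", "step": step}])


if __name__ == "__main__":
    print(clean_steps())
    print(clean_steps(full_erase=True))
```

The output could be supplied to `openstack baremetal node clean <node> --clean-steps '<json>'` while the node is in the `manageable` state.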

Neutron networking is implemented using OVN. Multi-tenant network isolation is implemented using virtual tenant networks, connecting a tenant's virtual machines and bare metal compute nodes.

- On Ethernet, tenant networks are VLANs, enabling easy communication between bare metal and virtualised compute resources. Multi-tenant Ethernet isolation is implemented using the networking-generic-switch Neutron driver. Separate networks for cleaning and provisioning keep the other phases of the infrastructure lifecycle safely out of tenants' view.

- On Infiniband, tenant networks are partitions, allocated as partition keys (pkeys) and managed with the networking-mellanox Neutron driver, integrated with Nvidia's Unified Fabric Manager (UFM).
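
The two kinds of tenant network use different identifier spaces: an 802.1Q VLAN ID is 12 bits (1 to 4094 usable), while an Infiniband partition key is 16 bits, with the top bit marking full rather than limited membership. The sketch below encodes a segmentation ID both ways. Treating the Neutron segmentation ID directly as the partition number is an assumption made for illustration, not a statement of how networking-mellanox or UFM allocate keys.

```python
VLAN_MIN, VLAN_MAX = 1, 4094   # 802.1Q: IDs 0 and 4095 are reserved
PKEY_FULL_MEMBER = 0x8000      # pkey top bit: 1 = full member, 0 = limited
PKEY_DEFAULT = 0x7FFF          # default partition, kept out of tenant use


def vlan_tag(segmentation_id: int) -> int:
    """Validate a segmentation ID as an 802.1Q VLAN tag."""
    if not VLAN_MIN <= segmentation_id <= VLAN_MAX:
        raise ValueError(f"VLAN ID {segmentation_id} out of range")
    return segmentation_id


def pkey(segmentation_id: int, full_member: bool = True) -> int:
    """Encode a segmentation ID as a 16-bit Infiniband partition key.

    Assumption for illustration: the segmentation ID is used directly as
    the 15-bit partition number.
    """
    if not 1 <= segmentation_id < PKEY_DEFAULT:
        raise ValueError(f"partition {segmentation_id} out of range")
    return (segmentation_id | PKEY_FULL_MEMBER) if full_member else segmentation_id


if __name__ == "__main__":
    for seg in (101, 102):
        print(f"tenant segment {seg}: VLAN {vlan_tag(seg)}, pkey {pkey(seg):#06x}")
```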

The VAST Data storage provides backing services for Glance, Cinder and Manila, plus S3 object storage for tenant workloads. For virtualised infrastructure, block storage is implemented using VAST Data's newly developed NVMe-over-TCP Cinder driver.

File storage is implemented using VAST Data's Manila driver, providing multi-tenant orchestration of high-performance file storage, all using the latest optimisations of industry-standard NFS.

Azimuth and Kubernetes

World-class AI capabilities require world-class compute platforms, building on high-performance infrastructure to enable users to get straight to AI productivity. StackHPC is the lead developer and custodian of the Azimuth Cloud Portal, an intuitive portal for providing compute platforms on a self-service basis. Azimuth is free and open source software, and StackHPC offers services for deployment, configuration, extension, maintenance, and support of the project.

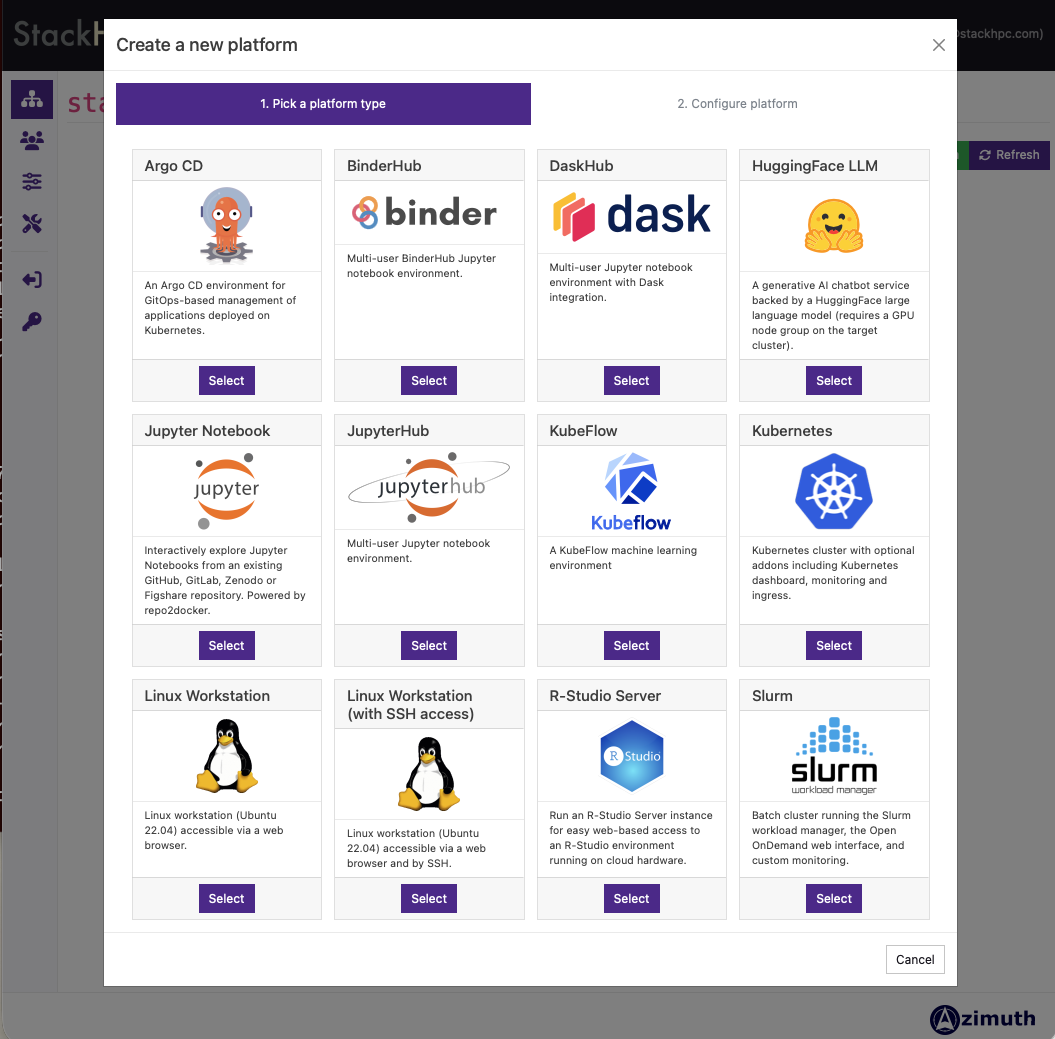

Platform creation in Azimuth involves selecting from a curated set of predefined infrastructure-as-code recipes, preconfigured with deep integrations into the underlying infrastructure.

Most AI workloads are deployed as containerised applications using Kubernetes. In the 6G AI cloud, Kubernetes clusters span VM resources and bare metal AI compute nodes. Kubernetes can be deployed as a platform in Azimuth, or through a GitOps-driven workflow using FluxCD. In both cases, Kubernetes is deployed using the industry-standard Cluster API and configured using Helm. 6G AI Sweden also provides access to the Nvidia NGC Catalog of containerised software applications and Helm charts.
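
In a mixed cluster, GPU workloads are typically steered onto the bare metal H200 nodes by requesting the `nvidia.com/gpu` resource, which only GPU nodes advertise. The sketch below generates such a Pod manifest as JSON. It assumes the cluster runs the NVIDIA device plugin (for example via the NVIDIA GPU Operator), and the NGC image tag is a hypothetical placeholder.

```python
import json


def gpu_pod(name: str, image: str, gpus: int = 8) -> dict:
    """Build a Pod manifest that requests whole GPUs via the NVIDIA device plugin."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Report how many GPUs the container can see, then exit.
                    "command": ["python", "-c",
                                "import torch; print(torch.cuda.device_count())"],
                    # Extended resources such as nvidia.com/gpu are set as limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical NGC image tag; choose a current tag from the NGC Catalog.
    manifest = gpu_pod("gpu-smoke-test", "nvcr.io/nvidia/pytorch:24.05-py3")
    print(json.dumps(manifest, indent=2))
```

Piping the output to `kubectl apply -f -` would schedule the pod; with `gpus=8` it can only land on a node exposing all eight H200s.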

Get in touch

If you would like to get in touch we would love to hear from you. Reach out to us via Bluesky, LinkedIn or directly via our contact page.