Kayobe: An Introduction

Kayobe is an official OpenStack project, and as such adheres to the Four Opens (open source, open design, open development, and open community). It is core to how StackHPC delivers and manages the OpenStack systems we work on.

Using Kayobe allows us to:

- Deploy and configure containerised OpenStack on bare metal nodes.

- Rapidly provision nodes at scale from a single host.

- Build systems designed around performance, sustainability, reliability, and scalability.

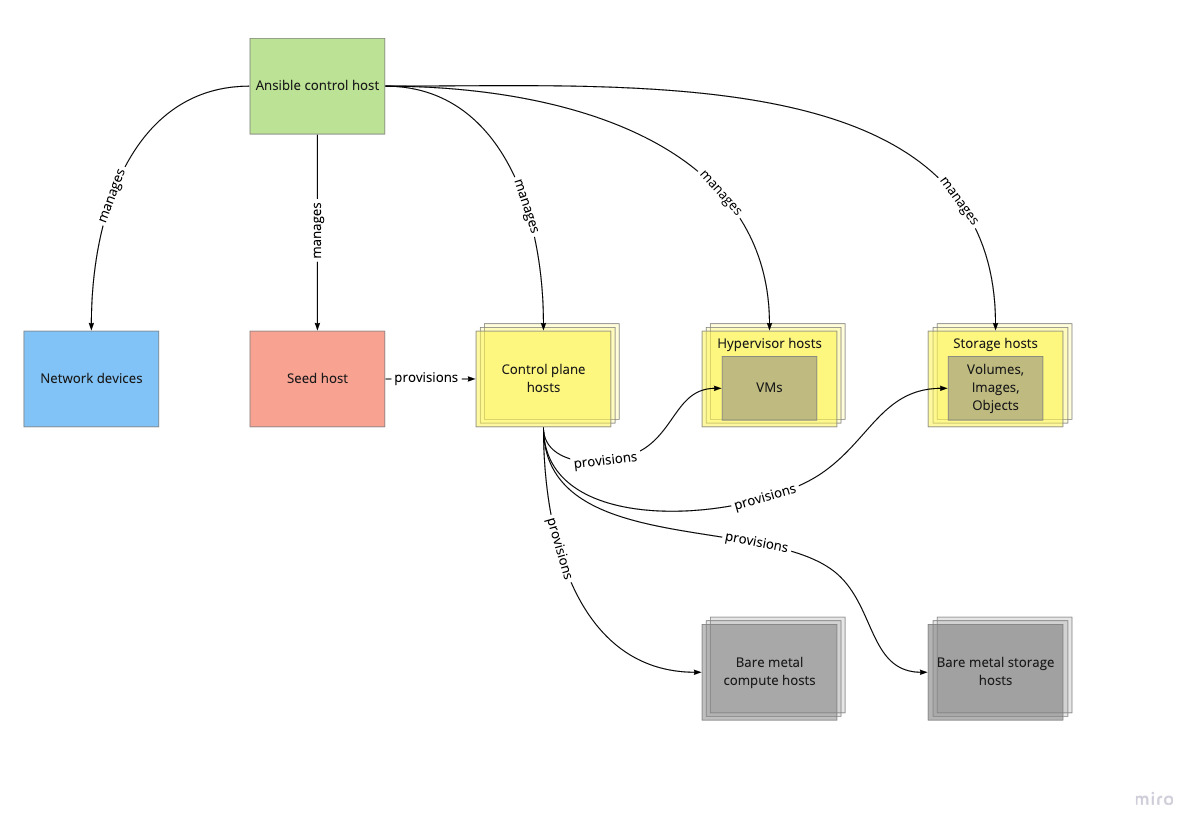

An example of how a hybrid system of bare metal and virtualised nodes is managed and provisioned by Kayobe

Kayobe: Built on Industry-tested Technologies

Kayobe is built around a set of tried and tested tools: Kolla, Kolla-Ansible, and Bifrost. These also give Kayobe its name: "Kolla on Bifrost" abbreviates to K.o.B.

Ansible: Familiar Playbook Technology

Ansible is used at every level of Kayobe, which gives operators a consistent interface and skill set throughout. This makes it practical to define and configure system infrastructure as code.

Kolla: Production-ready Containers

Each process is executed in a separate container. This approach is considered by many (StackHPC included) to be industry and community best practice. Doing this allows us to operate scalable, fast, reliable, and sustainable OpenStack clouds.

Kolla-Ansible: Highly Opinionated AND Completely Customisable

Operators with minimal experience are able to embrace and deploy OpenStack quickly. Then, as their experience grows, Kolla-Ansible allows them to modify the OpenStack configuration to suit their exact requirements.

Bifrost: Automated Deployments

Bifrost deploys a base image onto a set of known hardware. It uses Ansible playbooks and Ironic to provide a highly automated deployment mechanism. Within Kayobe, its purpose is to image all the unformatted nodes with an operating system ready for OpenStack.

The Kayobe Advantage

Kayobe is greater than the sum of its parts. It unifies a set of industry-tested technologies into a cohesive framework. The result is a tool that delivers well-defined infrastructure as code, and that offers practical advantages over using its component tools independently to achieve the same goals.

Streamlining and Automation

Kayobe's combined toolset effectively replaces complicated multi-step processes with single commands. This simplifies and streamlines common and critical tasks.
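As a rough illustration of the "single command per task" idea, the sketch below lists a typical deployment as an ordered set of Kayobe CLI invocations. The commands are taken from the upstream Kayobe deployment guide as best recalled here; the exact set and order depend on your Kayobe release and environment, so treat this as an assumption to check against the official documentation. The `run_steps` helper and its `dry_run` flag are hypothetical names introduced for this example.

```python
import shlex
import subprocess

# Command sequence as recalled from the upstream Kayobe deployment guide.
# Assumption: verify the steps and their order for your Kayobe release.
DEPLOY_STEPS = [
    "kayobe control host bootstrap",
    "kayobe seed host configure",
    "kayobe seed service deploy",
    "kayobe overcloud inventory discover",
    "kayobe overcloud hardware inspect",
    "kayobe overcloud provision",
    "kayobe overcloud host configure",
    "kayobe overcloud container image pull",
    "kayobe overcloud service deploy",
]


def run_steps(steps, dry_run=True):
    """Process each step in order, stopping at the first failure.

    With dry_run=True (the default) nothing is executed; the parsed
    argument vectors are returned so the plan can be reviewed first.
    """
    planned = []
    for step in steps:
        argv = shlex.split(step)
        planned.append(argv)
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(argv, check=True)
    return planned


if __name__ == "__main__":
    for argv in run_steps(DEPLOY_STEPS):
        print(" ".join(argv))
```

Run as-is, the script only prints the plan. Passing `dry_run=False` on a configured control host would execute each command in turn.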

User Friendly CLI

Kayobe’s Command Line Interface (CLI) provides straightforward, verbose commands. The scripts and APIs are made readily accessible. This lowers the barrier to entry and gives new operators a gentler learning curve.

Further Opinionation

Kayobe’s default settings, configurations, automation and APIs are informed by community best practice. The community's combined learnings have been baked into the toolset. This provides new operators with a safe on-boarding point before they gain the experience and confidence to customise for themselves. It also means they do not have to work out a correct order of operations on their own.

A System Seeded from a Single Host

Kayobe simplifies the bootstrapping of an OpenStack system. An operator can swiftly build and execute an effective playbook for a set of cleanly racked nodes, from a list of hostnames and IP addresses. This allows your whole system to be managed and deployed from just one seed node.

An example of a 3 rack system managed and provisioned using Kayobe with a high-availability control plane
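To make the "list of hostnames and IP addresses" starting point concrete, here is a minimal sketch that renders such a list as a generic Ansible INI inventory. This is not Kayobe's own implementation: Kayobe keeps its inventory within its configuration and can discover overcloud hosts itself. The `render_inventory` function, the group names, the hostnames and the addresses are all hypothetical, chosen for illustration only.

```python
from ipaddress import ip_address


def render_inventory(groups):
    """Render {group: {hostname: ip}} as an Ansible INI inventory string."""
    lines = []
    for group, hosts in groups.items():
        lines.append(f"[{group}]")
        for hostname, ip in sorted(hosts.items()):
            ip_address(ip)  # raises ValueError on a malformed address
            lines.append(f"{hostname} ansible_host={ip}")
        lines.append("")  # blank line between groups
    return "\n".join(lines)


# Hypothetical hosts and addresses, for illustration only.
example = {
    "controllers": {"ctrl0": "192.168.1.10", "ctrl1": "192.168.1.11"},
    "compute": {"comp0": "192.168.1.20"},
}

if __name__ == "__main__":
    print(render_inventory(example))
```

Validating each address before writing the inventory means a typo in the host list is caught before any playbook runs against it.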

Scalability and Flexibility

Scalability and flexibility are at the heart of Kayobe. It allows us to add nodes, allocate resources, and grow an OpenStack system safely and effectively. There is no need to redeploy the entire stack when adding more nodes or changing configurations.

Designed to Meet the Needs of Research Computing

A significant motivation for Kayobe development was to meet the operational and performance demands of the Square Kilometre Array (SKA) and in particular the Science Data Processor (SDP). From this crucible a hardware and software prototype system, AlaSKA, was forged. Kayobe has been further developed to address the gaps in existing tools and meet these exacting requirements. There is still more to do, but there is now a consistent architectural framework and scope.

You can read more about this here (part 1) and here (part 2) on our blog.

The result of this is that Kayobe fits naturally in the space where HPC, AI and HPDA converge. Kayobe deployments are well suited to particular patterns of use and operation often found in research computing - specifically Slurm (or similar) and Kubernetes. They are based on modern cloud-native methods, automated by Ansible, provisioned by Ironic, and multi-cloud ready.

Support for Virtual and Bare Metal Compute

Whatever the use cases, user needs, or workloads, Kayobe can be configured to accommodate them. This allows us to create and manage hybrid systems that support a wide range of users and are tailored to their requirements.

Improved Discovery, Inventory and Provisioning

Kayobe automates much of the usual complexity involved in discovery, inventory and provisioning of system hardware. This makes cloud deployment and provisioning simpler and faster.

Kayobe and StackHPC: Find Out More

If you are interested in Kayobe and how it might work for you, or StackHPC and our services, then please don’t hesitate to contact us.

You can also find us on the OpenStack Foundation marketplace.